Kubernetes at the (homelab) Edge

- Switching to Kubernetes

- Kubernetes at the (homelab) Edge (you are here)

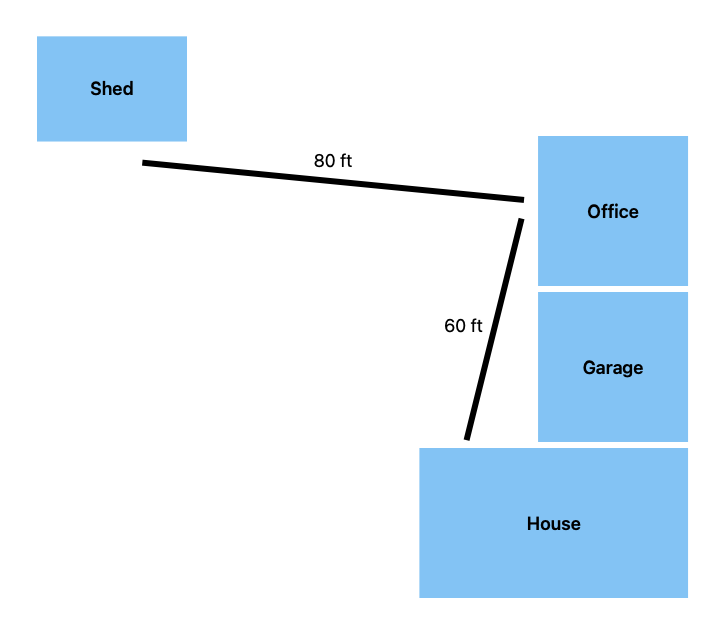

As I've mentioned before, the RF environment in my house is difficult. The house layout is roughly:

- single level house, half of which has a basement under it

- single level office / mother-in-law suite / ADU / whatever you want to call it

- two car garage in between

- shed in the back yard

The house and ADU are built out of cinder block and brick on the outside and plasterboard (not drywall) on the inside with foil-backed insulation sandwiched in between. The garage is built in between the two buildings, half with the same cinder block and brick construction and half with more modern stick construction.

These buildings were built in the 1950s when labor was cheap, longevity was valued, and AM radio stations were extremely powerful.

The shed is just an ordinary stick and OSB sheathing shed, but it's quite a distance from the house proper.

I treat the three buildings and the garage as separate "RF zones", for lack of a better term. Zigbee and Wi-Fi at 2.4GHz, and Wi-Fi 5 at 5GHz, propagate poorly, if at all, through the block and foil. Z-Wave (900MHz) fares slightly better, but because the house is so spread out and Z-Wave has a fairly low hop limit for mesh packets (4 hops, vs at least 15 for Zigbee), repeaters don't work very well. Lutron Caseta (434MHz) has phenomenal range and penetrates the foil with zero issues, but the device variety is severely limited. In particular, there are no Caseta smart locks.

Each zone has:

- At least one Wi-Fi access point

- A Z-Wave hub

- (sometimes) a Zigbee hub

- (sometimes) RS232 or RS485 to USB converters for equipment like our generator and furnace

Previous Solutions

The Z-Wave and Zigbee "hubs" are mostly just USB sticks stuck into a free port on a Dell Wyse 3040 thin client. I've had these little machines running for almost five years through a few different setups.

First, I had ser2net running on the 3040s and exposed over Tailscale, with the gateway software (zwave-js-ui and zigbee2mqtt) running on a server in my house.

This was fine until a node rebooted with significant clock skew (the CMOS battery was dead and the hunk of junk thought it was 2016). Tailscale refused to start because it thought the SSL certificate for the Tailscale control plane wasn't valid.

The second draft was to run the gateway software directly on the 3040s, and this actually works fine. The 3040s are capable machines, roughly comparable to a Raspberry Pi 4, so they can easily handle a little JavaScript program. It was somewhat less responsive than running the gateway on the server, though.

The third version of this is to use a hardware gateway. I'm currently using one for Zigbee because the location of the house zone's 3040 is not ideal for some important Zigbee devices and they lose connection a lot. I positioned the hardware gateway in a spot that has good Wi-Fi coverage but no Ethernet port and now those devices are rock solid.

But what if Kubernetes?

While rolling out Kubernetes I didn't really plan on converting the 3040s, because running the gateway software on them was working fine. Just as an experiment, I tried installing Talos on one of my spares. Amazingly, it worked great after one tweak to the install image. The 3040s are very particular about certain things and, like many of the SBCs that Talos supports, they don't like the swanky console dashboard. After I turned that off, the machine came right up as a Kubernetes node in the cluster.

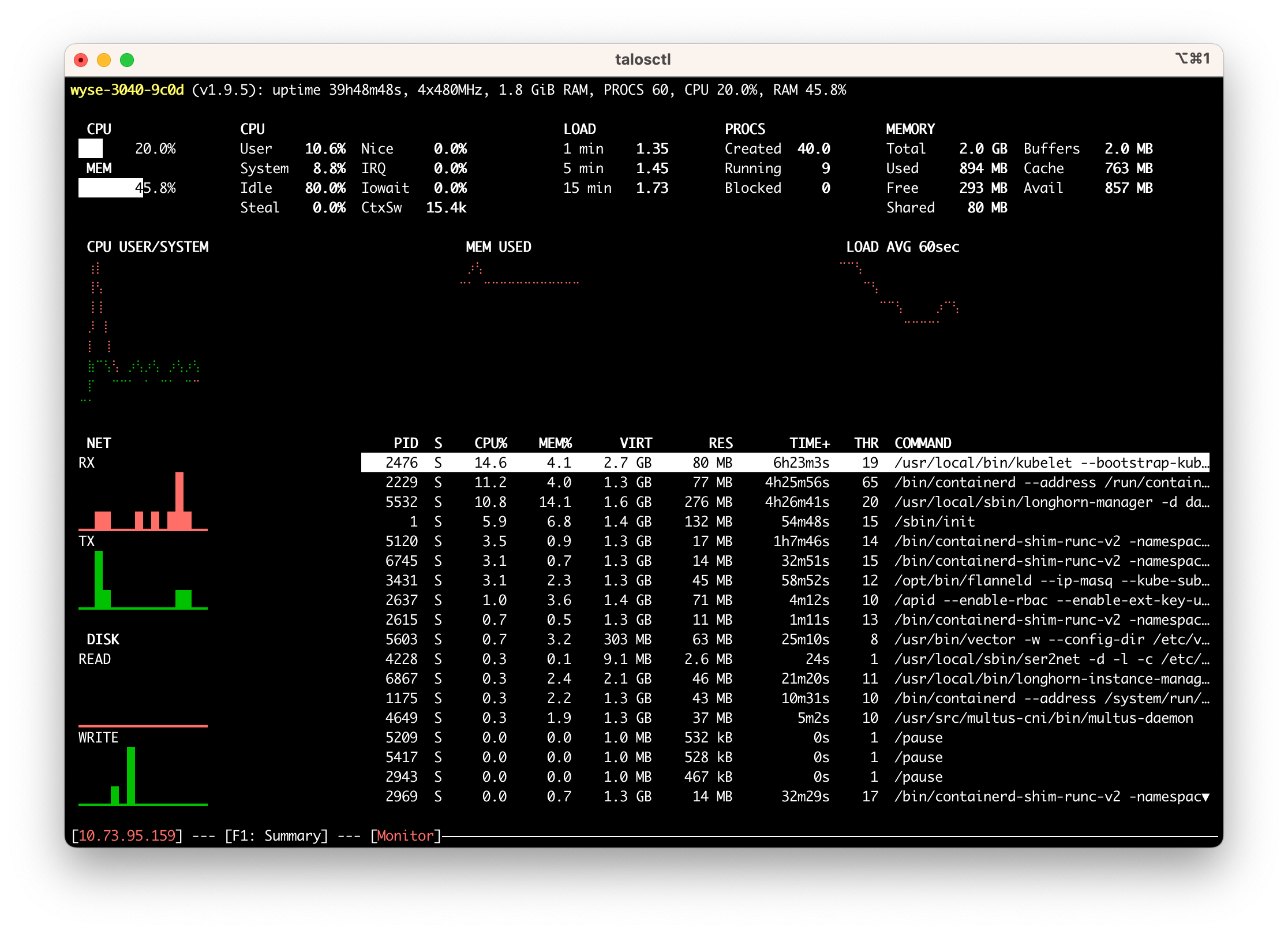

At idle, the Kubernetes workloads plus my cluster's standard DaemonSet pods use about 40% of the machine's 2GiB of memory and roughly 30% of its CPU.

That leaves more than enough room to run ser2net.
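For a rough sense of the arithmetic: 40% of 2GiB is about 820MiB in use, leaving roughly 1.2GiB for everything else. On an ordinary Linux host, one common way to get a comparable "used" figure is MemTotal minus MemAvailable from /proc/meminfo. The sketch below does just that calculation; the function name is hypothetical, and since Talos has no shell, it illustrates the math on a regular machine rather than being something to run on the 3040s themselves.

```shell
#!/bin/bash
# mem_used_percent [FILE]
# Hypothetical helper: prints the integer percentage of memory in use, computed
# as (MemTotal - MemAvailable) / MemTotal from a /proc/meminfo-style file.
mem_used_percent() {
  local file="${1:-/proc/meminfo}"
  awk '
    $1 == "MemTotal:"     { total = $2 }
    $1 == "MemAvailable:" { avail = $2 }
    END {
      if (total == 0) exit 1   # no MemTotal line: not a meminfo file
      printf "%d\n", (total - avail) * 100 / total
    }
  ' "$file"
}
```

Calling it with no argument reads the live /proc/meminfo; passing a file path makes it easy to check the math against saved output.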

Automatic ser2net Config

I initially planned to use Akri to spawn ser2net. Akri is a project that came out of Microsoft. It acts as a generic device plugin for Kubernetes and also manages what it calls "brokers", which are just programs that attach to a discovered device and expose it to the cluster.

That sounded perfect for my purposes so I set it up and let it bake for a few days. It did not go well.

The big problem is that Akri is just not very stable. Things were randomly falling over in such a way that the Akri-managed Z-Wave ser2net brokers would crash-loop overnight. I made no progress debugging it, so I started on my fallback idea: automatically managing a ser2net config.

Realistically, my needs are simple:

- I want to present one or more serial devices to the network.

- I want this to be reliable.

- I want this to be secure.

- I don't want to micromanage it.

It turns out that discovering USB serial devices on Linux is pretty trivial. You just have to follow a bunch of symlinks.

This shell script is based on logic found in the go-serial project:

```shell
#!/bin/bash
set -e
set -x

# Find all of the TTYs with names we might be interested in.
# "|| true" keeps set -e from aborting the script if nothing matches.
ttys=$(ls /sys/class/tty | grep -E '^(ttyS|ttyHS|ttyUSB|ttyACM|ttyAMA|rfcomm|ttyO|ttymxc)[0-9]{1,3}$' || true)

for tty in $ttys; do
  # follow the symlink to find the real device
  realDevice=$(readlink -f "/sys/class/tty/$tty/device")

  # what subsystem is it?
  subsystem=$(basename "$(readlink -f "$realDevice/subsystem")")

  # locate the directory where the usb information is
  usbdir=""
  if [ "$subsystem" = "usb-serial" ]; then
    # usb-serial is two levels up from the tty
    usbdir=$(dirname "$(dirname "$realDevice")")
  elif [ "$subsystem" = "usb" ]; then
    # regular usb is one level up from the tty
    usbdir=$(dirname "$realDevice")
  else
    # we don't care about this device
    continue
  fi

  # read the productId and vendorId attributes from the USB device
  productId=$(cat "$usbdir/idProduct")
  vendorId=$(cat "$usbdir/idVendor")

  # look for a snippet named after the vendorId:productId pair
  snippetFile="$vendorId:$productId.yaml"
  if [ -f "$snippetFile" ]; then
    # substitute the real device path for the DEVNODE placeholder
    sed "s|DEVNODE|/dev/$tty|" "$snippetFile"
  fi
done
```

The last few lines of the loop body look for a YAML snippet named after the specific vendorId:productId pair. If there is one, they replace the placeholder DEVNODE with the actual device path and write the result to stdout.
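Because discover.sh reads /sys directly, it only does anything useful on a machine with the right hardware plugged in. One way to exercise the same symlink-walking logic elsewhere is to make the sysfs root and the snippet directory overridable and point them at a fake tree. The sketch below is a restructured variant along those lines; the discover function name and the SYSFS_ROOT and SNIPPET_DIR variables are hypothetical additions, not part of discover.sh.

```shell
#!/bin/bash
# Hypothetical, test-friendly variant of discover.sh. SYSFS_ROOT and
# SNIPPET_DIR are assumptions (defaults: /sys and the current directory)
# so the logic can run against a fake sysfs tree.
discover() {
  local sysfs="${SYSFS_ROOT:-/sys}" snippets="${SNIPPET_DIR:-.}"
  local re='^(ttyS|ttyHS|ttyUSB|ttyACM|ttyAMA|rfcomm|ttyO|ttymxc)[0-9]{1,3}$'
  local path tty real subsystem usbdir vendorId productId snippet

  for path in "$sysfs"/class/tty/*; do
    tty=$(basename "$path")
    [[ "$tty" =~ $re ]] || continue
    # skip matching names that have no backing device link
    [ -e "$path/device" ] || continue

    # follow the device symlink, then the subsystem symlink
    real=$(readlink -f "$path/device")
    subsystem=$(basename "$(readlink -f "$real/subsystem")")

    case "$subsystem" in
      usb-serial) usbdir=$(dirname "$(dirname "$real")") ;;  # two levels up
      usb)        usbdir=$(dirname "$real") ;;               # one level up
      *)          continue ;;                                # not USB
    esac

    vendorId=$(cat "$usbdir/idVendor")
    productId=$(cat "$usbdir/idProduct")
    snippet="$snippets/$vendorId:$productId.yaml"
    if [ -f "$snippet" ]; then
      sed "s|DEVNODE|/dev/$tty|" "$snippet"
    fi
  done
}
```

A fake tree only needs a class/tty/NAME/device symlink, a subsystem symlink inside the target directory, and idVendor/idProduct files at the level the usb or usb-serial branch expects.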

Here's what one of the snippets looks like:

```yaml
# zigbee
connection: &zigbee
  accepter: tcp,6639
  connector: serialdev,DEVNODE,115200n81,local,dtr=off,rts=off
  options:
    kickolduser: true
```

This snippet tells ser2net to listen on TCP port 6639 and wire that connection to the serial device at path DEVNODE, running at 115200 baud with no parity, 8 data bits, and 1 stop bit (115200n81), and keeping the DTR and RTS lines off (this is specific to the Sonoff Zigbee stick I'm using).

Further, when a new connection opens, ser2net immediately kicks off the old one (kickolduser).
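The compact 115200n81 string packs four serial settings together: baud rate, parity letter, data bits, and stop bits. As an illustration of how it breaks down, here is a small hypothetical helper that splits such a string into its parts. It only handles none/even/odd parity letters; the ser2net documentation is the authority on the full set of accepted forms.

```shell
#!/bin/bash
# decode_serial_params SPEC
# Hypothetical helper: splits a "<baud><parity><databits><stopbits>" string
# such as "115200n81" into its parts. Only n/e/o parity letters are handled.
decode_serial_params() {
  local spec="$1" parity
  local re='^([0-9]+)([nNeEoO])([5-8])([12])$'
  if [[ "$spec" =~ $re ]]; then
    case "${BASH_REMATCH[2]}" in
      n|N) parity=none ;;
      e|E) parity=even ;;
      o|O) parity=odd ;;
    esac
    echo "baud=${BASH_REMATCH[1]} parity=$parity databits=${BASH_REMATCH[3]} stopbits=${BASH_REMATCH[4]}"
  else
    echo "unrecognized serial parameters: $spec" >&2
    return 1
  fi
}

decode_serial_params 115200n81
# prints: baud=115200 parity=none databits=8 stopbits=1
```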

I drop the above script, the YAML snippets, and this simple entrypoint.sh script into a container image based on the standard ser2net container.

```shell
#!/bin/bash
set -e
set -x
set -o pipefail

echo "Generating ser2net.yaml"
mkdir -p /etc/ser2net
./discover.sh > /etc/ser2net/ser2net.yaml

echo "Running ser2net"
cat /etc/ser2net/ser2net.yaml
exec ser2net -d -l -c /etc/ser2net/ser2net.yaml
```
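Nothing in entrypoint.sh checks whether discovery actually matched any devices, so a labeled node whose stick has been unplugged still runs ser2net against an empty generated config. If you would rather see that as a failing pod, one option (not part of the setup described here) is a small check before the exec line. The helper name below is hypothetical.

```shell
#!/bin/bash
# config_has_connections FILE
# Hypothetical helper, not part of the original entrypoint.sh: succeeds only
# if the generated config defines at least one top-level "connection:" entry.
config_has_connections() {
  grep -q '^connection:' "$1"
}

# Intended use, just before "exec ser2net ..." in entrypoint.sh:
#   if ! config_has_connections /etc/ser2net/ser2net.yaml; then
#     echo "no known serial devices found" >&2
#     exit 1
#   fi
```

With the default restartPolicy: Always, exiting nonzero turns a missing device into a crash-looping pod that is easy to spot; whether that beats a quietly idle ser2net is a judgment call.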

I then deploy it to my cluster as a DaemonSet targeting nodes labeled with keen.land/serials=true:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ser2net
  namespace: iot-system
  labels:
    app: ser2net
spec:
  selector:
    matchLabels:
      app: ser2net
  template:
    metadata:
      labels:
        app: ser2net
    spec:
      nodeSelector:
        keen.land/serials: "true"
      volumes:
        - name: devices
          hostPath:
            path: /dev
      imagePullSecrets:
        - name: ghcr.io
      containers:
        - name: ser2net
          image: "ghcr.io/peterkeen/ser2net-auto:main"
          securityContext:
            privileged: true
          volumeMounts:
            - name: devices
              mountPath: /dev
      restartPolicy: Always
      hostNetwork: true
```

The interesting things here are that the pod runs in privileged mode and on the host network.

I considered using container ports, but then I would have had to somehow know which DaemonSet pod mapped to which host.

With hostNetwork: true I don't have to think about that and can just use the host's name in my gateway configs.
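Since the pods share each node's network namespace, the gateway configs can point straight at a node's hostname and the snippet's port (6639 for the Zigbee stick). When something isn't connecting, a quick first check from the server is whether that port accepts TCP connections at all. The sketch below uses bash's /dev/tcp redirection, bounded by coreutils timeout; the function name and the example hostname are hypothetical. Because the snippet sets kickolduser: true, a successful probe will kick off whichever gateway is currently connected, so this is best run while the gateway is stopped.

```shell
#!/bin/bash
# port_open HOST PORT [SECONDS]
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT opens within
# SECONDS (default 2). bash's /dev/tcp/HOST/PORT redirection does the connect;
# timeout keeps an unreachable host from stalling the check.
port_open() {
  local host="$1" port="$2" secs="${3:-2}"
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example with a hypothetical node name:
#   port_open house-3040 6639 && echo "zigbee ser2net reachable"
```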

There's an opportunity here to cook up a custom deployment type with something like Metacontroller, which I have installed in the cluster but haven't done anything with yet.

Pros and Cons

So all of this work and what do I have? Basically what I had when I started:

- ser2net on the devices with the serial ports

- gateway software running on a server

The big pro of this setup is that I have one consistent management interface. I can set up and tear down ser2net with the exact same interface I use to set up and tear down everything else in the cluster.

There are a couple of cons, too. First, this probably uses a touch more power than the old solution because, in addition to ser2net, the Wyse 3040s are running all of the Kubernetes infrastructure. These things use so little power as it is that I don't think it really matters, but it's worth pointing out.

Second, there's more to go wrong. Before this I had Alpine running basically nothing except ser2net. The system was static in practice, meaning that there was very little that could break.

Now there are several components running all the time that could break with a bad upgrade and could require me to take a crash cart out to each machine.

This is also putting more stress on the drives. All of these machines now boot from significantly overprovisioned, high-endurance SD cards, so that shouldn't be an issue, but it's still something to keep in mind. The nice thing is that they're entirely stateless, so swapping the card and reinstalling should be a quick operation.

Ultimately I think this is a good move and I plan to continue down the path of making every non-laptop device run on Kubernetes with very few exceptions.